We are always happy to share source code or data from papers and projects, as long as appropriate credit/citation is given for any use of our work and it is not being used for commercial purposes. Email Laura (waller @ berkeley [dot] edu) for code requests from specific papers, or see below for some open source datasets and codes. If you use this code/data, we’d love to hear about what you do with it!

3D Multiple-Scattering Models

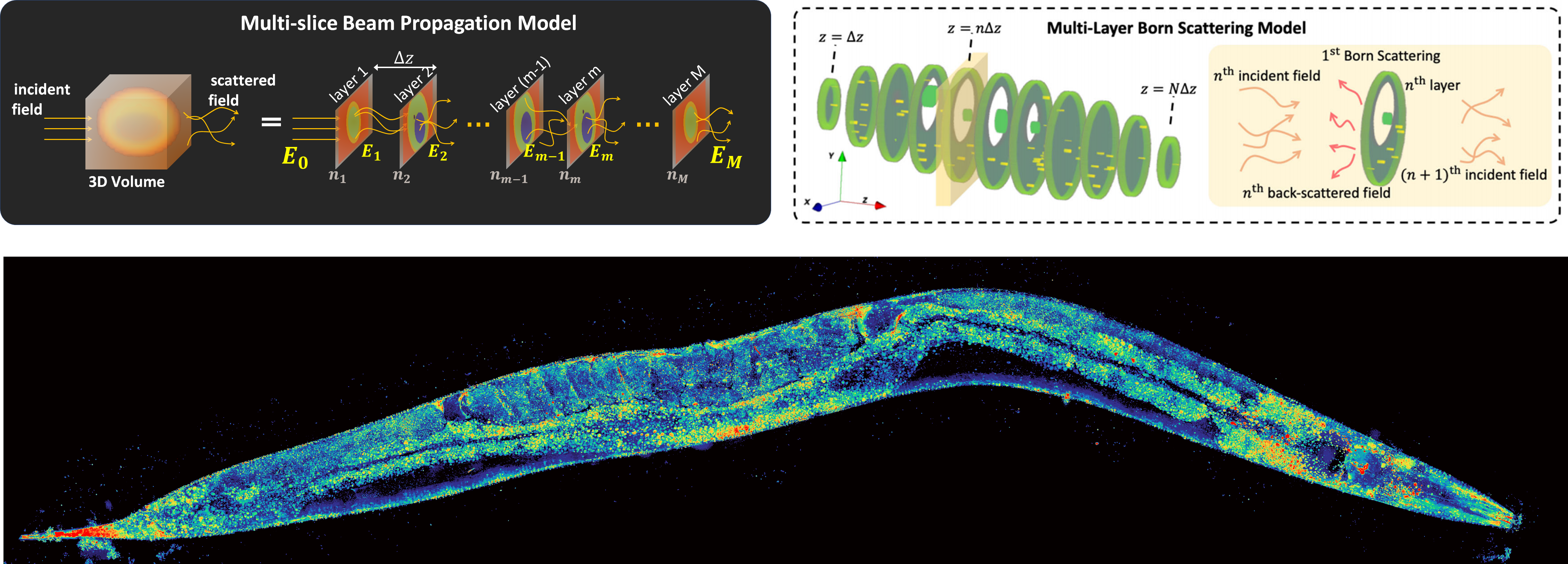

Multi-slice beam propagation: Provides Matlab code for the multi-slice beam propagation scattering model for 3D refractive-index reconstruction of multiple-scattering objects. Please cite as:

Multi-layer Born: Provides Python code for the multi-layer Born scattering model for 3D phase-contrast optical diffraction tomography. Please cite as:
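The multi-slice beam propagation model listed above can be illustrated with a short NumPy sketch. This is our own simplified version, not the released Matlab code: each thin slice of the refractive-index contrast Δn imparts a phase delay exp(i k0 Δn Δz), and the field is then diffracted over Δz with the angular-spectrum method. The grid size, wavelength, medium index, and the function names `angular_spectrum_kernel` and `multislice_propagate` are assumptions for illustration, and evanescent waves are simply discarded.

```python
import numpy as np


def angular_spectrum_kernel(n_pix, dx, wavelength, n_medium, dz):
    """Angular-spectrum transfer function for propagating dz through a uniform medium."""
    fx = np.fft.fftfreq(n_pix, d=dx)
    FX, FY = np.meshgrid(fx, fx, indexing="ij")
    k = 2 * np.pi * n_medium / wavelength
    kz_sq = k**2 - (2 * np.pi * FX) ** 2 - (2 * np.pi * FY) ** 2
    kz = np.sqrt(np.maximum(kz_sq, 0.0))
    # Evanescent components (kz_sq <= 0) are dropped in this sketch.
    return np.where(kz_sq > 0, np.exp(1j * kz * dz), 0.0)


def multislice_propagate(field_in, delta_n, dx, dz, wavelength, n_medium):
    """Propagate an N x N field through a (Z, N, N) refractive-index contrast volume."""
    k0 = 2 * np.pi / wavelength
    kernel = angular_spectrum_kernel(field_in.shape[0], dx, wavelength, n_medium, dz)
    field = field_in.astype(complex)
    for slice_dn in delta_n:
        field = field * np.exp(1j * k0 * slice_dn * dz)    # thin-slice phase delay
        field = np.fft.ifft2(np.fft.fft2(field) * kernel)  # diffract to the next slice
    return field


# Example (units: micrometers): a weak cube of index contrast 0.01 in water.
N, Z = 64, 10
dn = np.zeros((Z, N, N))
dn[:, 24:40, 24:40] = 0.01
out = multislice_propagate(np.ones((N, N)), dn, dx=0.2, dz=0.5, wavelength=0.5, n_medium=1.33)
intensity = np.abs(out) ** 2
```

Because each slice is a pure phase mask and propagation only filters the spectrum, the total energy can never grow, which is a quick sanity check on any multi-slice implementation.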

Physics-Based Machine Learning

Physics-based learning demonstration: The goal of this tutorial is to explain, step by step, how to implement physics-based learning for the rapid prototyping of a computational imaging system. We provide a basic overview of physics-based learning, the construction of a physics-based network, and its reduction to practice. Specifically, we advocate exploiting automatic differentiation twice: once to build a physics-based network and again to perform physics-based learning. Thus, the user need only implement the forward model of their system, which speeds up prototyping. We provide an open-source PyTorch implementation of a physics-based network and training procedure for a generic sparse recovery problem.

Please cite as:
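To make the idea of a physics-based network concrete, here is a NumPy sketch of the forward pass of an unrolled network for a generic sparse recovery problem y = A x. Each layer is one proximal-gradient (ISTA) step built from the known forward model A, with its own step size and soft threshold; in a PyTorch setting, these per-layer values are what automatic differentiation would learn. The training loop is omitted here, and `unrolled_ista` and `soft_threshold` are hypothetical names of ours.

```python
import numpy as np


def soft_threshold(v, tau):
    """Proximal operator of tau * ||v||_1 (elementwise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def unrolled_ista(y, A, step_sizes, thresholds):
    """Forward pass of an unrolled ISTA network for y = A x with sparse x.

    Layer k computes x <- soft_threshold(x - alpha_k * A^T (A x - y), tau_k);
    the lists step_sizes (alpha_k) and thresholds (tau_k) are the trainable parameters.
    """
    x = np.zeros(A.shape[1])
    for alpha, tau in zip(step_sizes, thresholds):
        grad = A.T @ (A @ x - y)      # gradient of the data term 0.5*||A x - y||^2
        x = soft_threshold(x - alpha * grad, tau)
    return x


# Example: 10 layers initialized to plain ISTA (step 1/L, threshold lambda/L).
rng = np.random.default_rng(0)
A = rng.standard_normal((40, 80)) / np.sqrt(40)
x_true = np.zeros(80)
x_true[rng.choice(80, size=4, replace=False)] = 1.0
y = A @ x_true
L = np.linalg.norm(A, 2) ** 2         # Lipschitz constant of the gradient
n_layers, lam = 10, 0.01
x_hat = unrolled_ista(y, A, [1.0 / L] * n_layers, [lam / L] * n_layers)
```

Initialized this way, the network is exactly ten iterations of ISTA; learning would then adjust the step sizes and thresholds so that a small, fixed number of layers gives a better reconstruction.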

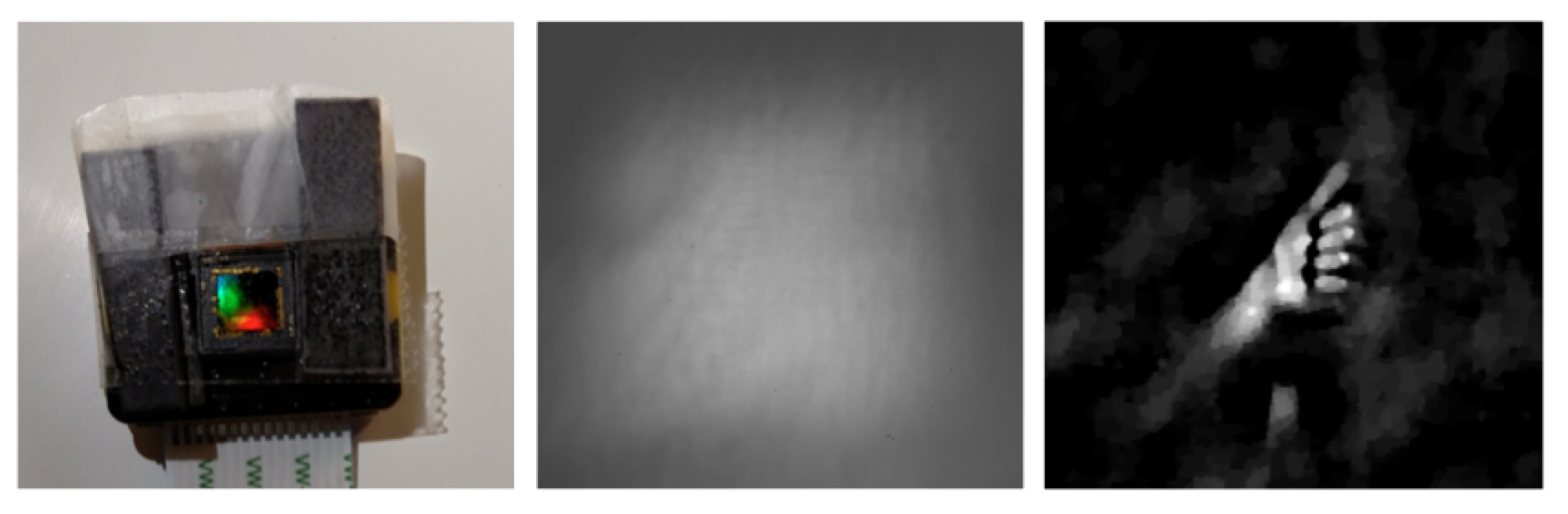

Physics-based learning for lensless imaging: This page provides an example of an unrolled, physics-based network for lensless imaging reconstruction, using the alternating direction method of multipliers (ADMM) as the foundation of the network. In addition, we provide a large dataset of 25,000 aligned lensless (DiffuserCam) and lensed images, which can be used to try out different learning-based inverse algorithms. Please cite as:

DiffuserCam

DiffuserCam reconstruction algorithm: Matlab code implements the alternating direction method of multipliers (ADMM) reconstruction algorithm for recovering images from diffuser-based lensless cameras. The algorithm iteratively solves a least-squares problem to recover either 2D pictures or 3D data cubes from DiffuserCam raw data. Sample DiffuserCam data is provided.

Please cite as:
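The ADMM structure can be seen on a simplified version of the problem: recover a nonnegative image x from b = h * x (circular 2D convolution with the PSF h) by minimizing 0.5 ||h * x - b||^2 subject to x >= 0. Splitting x = z makes the least-squares step exactly solvable with FFTs and turns the constraint into a simple projection. This is a hedged NumPy illustration, not the released Matlab code: it omits details that a full DiffuserCam model may need (for example the finite sensor size and regularization), and the penalty `rho`, the random point-like PSF, and the function names are assumptions.

```python
import numpy as np


def conv2_circ(x, psf):
    """Circular 2D convolution via FFT (the simplified forward model b = h * x)."""
    return np.real(np.fft.ifft2(np.fft.fft2(x) * np.fft.fft2(psf)))


def admm_nonneg_deconv(b, psf, rho=0.1, n_iters=300):
    """Scaled-form ADMM for min_x 0.5*||h * x - b||^2 subject to x >= 0."""
    H = np.fft.fft2(psf)
    Htb = np.conj(H) * np.fft.fft2(b)      # H^T b, in the Fourier domain
    denom = np.abs(H) ** 2 + rho           # H^T H + rho I is diagonal in Fourier
    z = np.zeros_like(b)
    u = np.zeros_like(b)                   # scaled dual variable
    for _ in range(n_iters):
        # x-update: exact solve of (H^T H + rho I) x = H^T b + rho (z - u)
        x = np.real(np.fft.ifft2((Htb + rho * np.fft.fft2(z - u)) / denom))
        # z-update: project onto the nonnegative orthant
        z = np.maximum(x + u, 0.0)
        # dual update on the constraint x = z
        u = u + x - z
    return z


# Example with a hypothetical sparse, caustic-like PSF (a few bright points).
rng = np.random.default_rng(1)
psf = np.zeros((32, 32))
psf[rng.integers(0, 32, 20), rng.integers(0, 32, 20)] = 1.0
psf /= psf.sum()
x_true = 0.2 + 0.8 * rng.random((32, 32))
b = conv2_circ(x_true, psf)
x_hat = admm_nonneg_deconv(b, psf)
```

Diagonalizing the convolution with FFTs is what keeps each ADMM iteration cheap: the x-update is a pointwise division instead of a large linear solve.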

(Design) Build your own DiffuserCam

Please cite as:


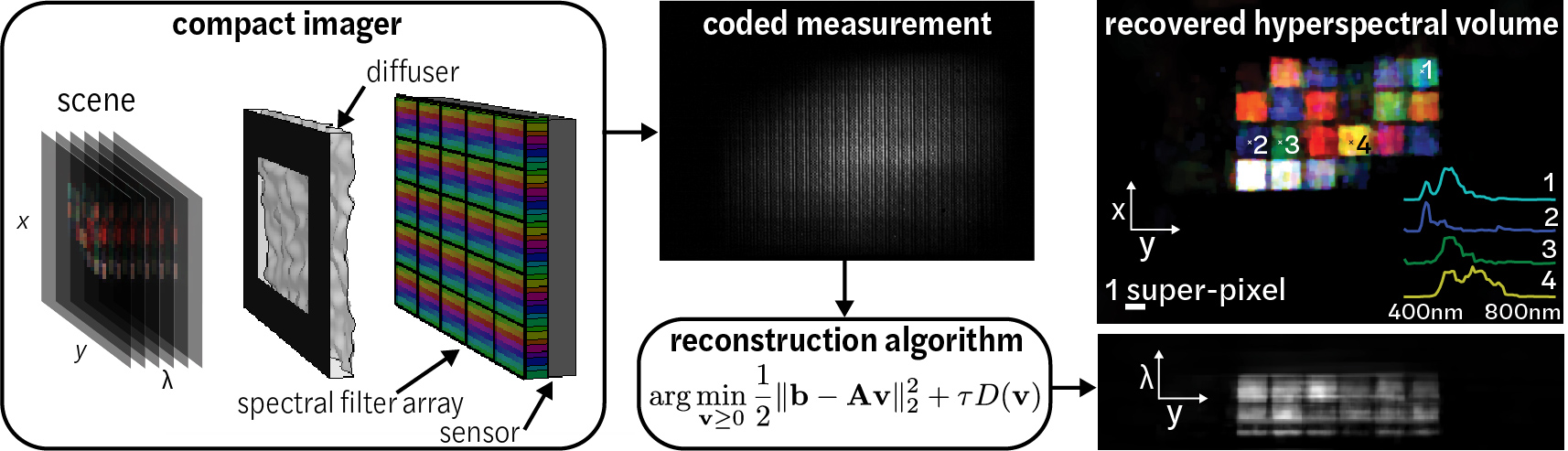

Spectral DiffuserCam reconstruction algorithm: Python and Matlab code implements the Fast Iterative Shrinkage-Thresholding Algorithm (FISTA) for recovering images from a hyperspectral diffuser-based lensless camera. The algorithm iteratively solves a compressive-sensing reconstruction problem to recover a 3D hyperspectral data cube from Spectral DiffuserCam raw data. Sample Spectral DiffuserCam data is provided. Please cite as:
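FISTA needs three ingredients: a forward operator A that maps the hyperspectral cube x[λ, i, j] to one 2D measurement, its adjoint, and the accelerated proximal-gradient loop. The NumPy sketch below assumes a simplified model b = Σ_λ m_λ ⊙ (h * x_λ), with a wavelength-independent PSF h, a hypothetical per-pixel spectral filter transmission m_λ, circular convolution, no sensor cropping, and nonnegativity as the only prior. The actual Spectral DiffuserCam model and regularizer may differ, and the function names are ours.

```python
import numpy as np


def forward(x, H, masks):
    """b = sum over wavelengths of masks[l] * (h conv x[l]); H is the FFT of the PSF."""
    blurred = np.real(np.fft.ifft2(np.fft.fft2(x, axes=(-2, -1)) * H, axes=(-2, -1)))
    return np.sum(masks * blurred, axis=0)


def adjoint(b, H, masks):
    """Adjoint of forward: weight b by each mask, then correlate with the PSF."""
    weighted = masks * b                   # broadcast the 2D b over wavelengths
    return np.real(np.fft.ifft2(np.fft.fft2(weighted, axes=(-2, -1)) * np.conj(H), axes=(-2, -1)))


def fista_nonneg(b, H, masks, n_iters=200):
    """FISTA for min_x 0.5*||A x - b||^2 subject to x >= 0."""
    # Upper bound on ||A||^2: max over pixels of sum_l masks^2, times max |H|^2.
    L = np.max(np.sum(masks**2, axis=0)) * np.max(np.abs(H)) ** 2
    x = np.zeros(masks.shape)
    y, t = x.copy(), 1.0
    for _ in range(n_iters):
        grad = adjoint(forward(y, H, masks) - b, H, masks)
        x_new = np.maximum(y - grad / L, 0.0)           # prox of the nonnegativity constraint
        t_new = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        y = x_new + ((t - 1.0) / t_new) * (x_new - x)   # momentum (acceleration) step
        x, t = x_new, t_new
    return x


# Example: 4 spectral channels on a 16 x 16 grid with random (hypothetical) masks.
rng = np.random.default_rng(2)
n_wl, N = 4, 16
psf = rng.random((N, N))
psf /= psf.sum()
H = np.fft.fft2(psf)
masks = rng.random((n_wl, N, N))
x_true = rng.random((n_wl, N, N))
b = forward(x_true, H, masks)
x_hat = fista_nonneg(b, H, masks)
```

A quick dot-product test, comparing sum(forward(x) * r) with sum(x * adjoint(r)) for random x and r, is a cheap way to catch adjoint bugs before running FISTA.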

Microscope control

Pycro-Manager: Pycro-Manager enables Python control of Micro-Manager (open-source microscopy control software) and provides a simple interface for developing customized experiments on complex, automated microscopes. It is built to handle the requirements of modern, data-intensive microscopy, and it can integrate real-time image processing for adaptive experiments. It is compatible with hundreds of microscope components and full microscopes.

Structured Illumination with Speckle Illumination

High content speckle structured illumination microscopy: Provides Matlab and Python implementations of a coherent/fluorescent structured illumination microscopy algorithm with random speckle illumination. Please cite as:
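For the fluorescent case, the speckle SIM measurement is commonly modeled as y_k = h * (s_k ⊙ x): the fluorophore density x is modulated by the k-th speckle pattern s_k and then blurred by the detection PSF h. The NumPy sketch below only simulates such measurements for laterally shifted copies of one speckle pattern. The Gaussian PSF, the circular-shift scanning, the speckle generator, and all function names are our assumptions, and the reconstruction step (which may also need to estimate the unknown speckle pattern) is not shown.

```python
import numpy as np


def gaussian_otf(n, sigma_px):
    """OTF of a Gaussian PSF with standard deviation sigma_px pixels (unit gain at DC)."""
    f = np.fft.fftfreq(n)
    FX, FY = np.meshgrid(f, f, indexing="ij")
    return np.exp(-2 * (np.pi * sigma_px) ** 2 * (FX**2 + FY**2))


def random_speckle(n, corr_px, rng):
    """Fully developed speckle: intensity of a low-pass filtered circular Gaussian field."""
    field = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
    field = np.fft.ifft2(np.fft.fft2(field) * gaussian_otf(n, corr_px))
    s = np.abs(field) ** 2
    return s / s.mean()


def speckle_sim_measurements(x, speckle, shifts, otf):
    """y_k = h * (s_k . x), where s_k is the speckle circularly shifted by shifts[k]."""
    ys = []
    for dy, dx in shifts:
        s_k = np.roll(speckle, shift=(dy, dx), axis=(0, 1))
        ys.append(np.real(np.fft.ifft2(np.fft.fft2(s_k * x) * otf)))
    return np.stack(ys)


# Example: a fluorescent bar imaged under 8 laterally shifted speckle patterns.
rng = np.random.default_rng(3)
n = 64
x = np.zeros((n, n))
x[20:44, 30:34] = 1.0
speckle = random_speckle(n, corr_px=1.0, rng=rng)
shifts = [(0, 3 * k) for k in range(8)]
ys = speckle_sim_measurements(x, speckle, shifts, gaussian_otf(n, sigma_px=3.0))
```

Because the speckle contains spatial frequencies beyond the detection OTF, each shifted pattern mixes some high-frequency sample information into the low-pass images, which is what a reconstruction can exploit to super-resolve.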

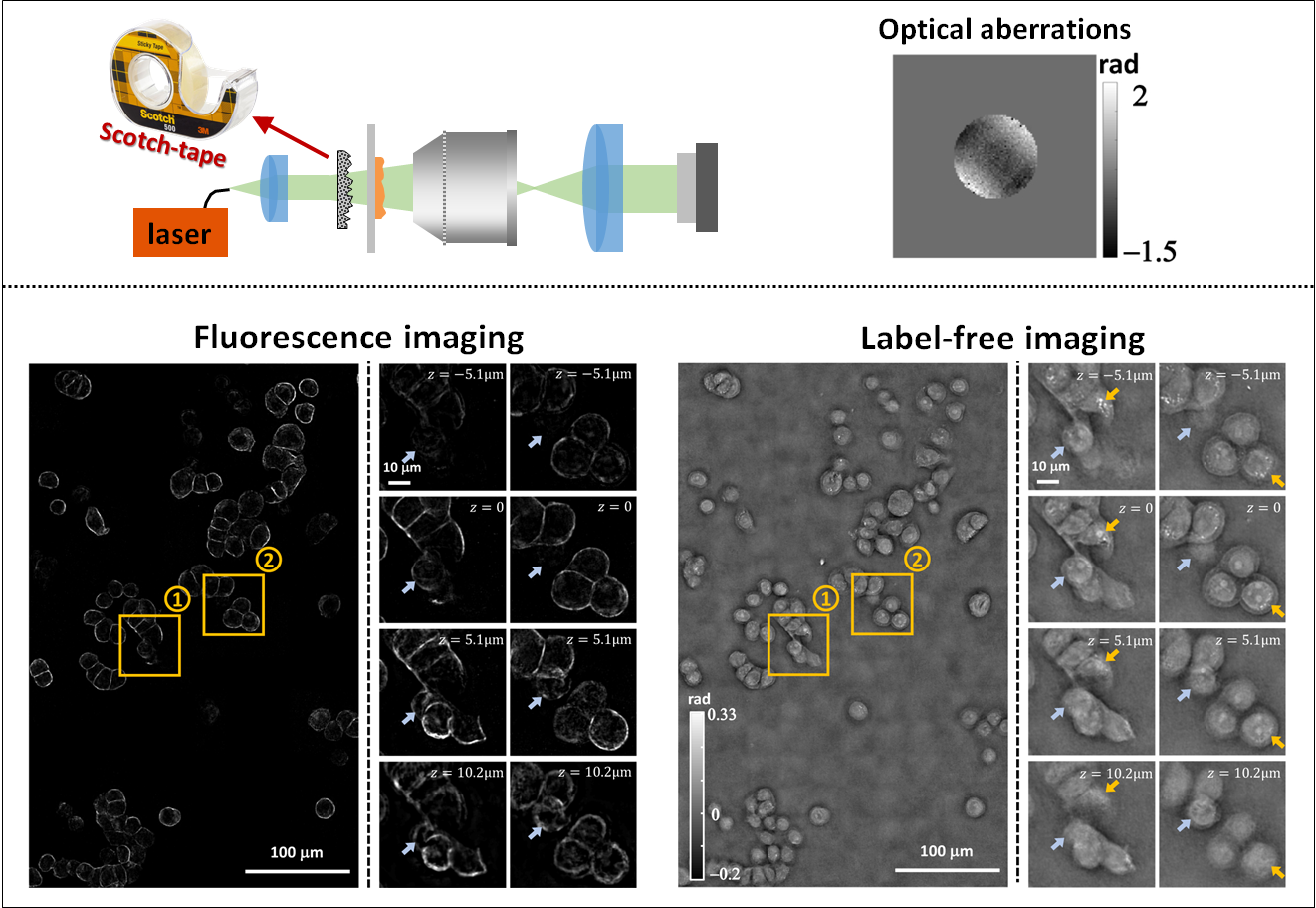

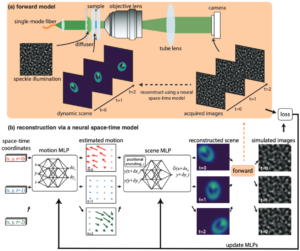

Structured illumination microscopy of dynamic samples: Provides Python code implementing the gradient descent solver for the neural space-time model used in the Speckle Flow SIM method. Speckle Flow SIM is a new structured illumination microscopy (SIM) technique that uses static patterned illumination on a dynamic scene and models the space-time relationship to super-resolve the dynamic scene. Please cite as:

Differential Phase Contrast

Quantitative Differential Phase Contrast (DPC) phase imaging with LED array (with Aberration Correction): The code provides the option to solve the DPC phase retrieval problem with two different algorithms. DPC Quantitative Phase Microscopy: run “main_dpc.m” in the matlab_code folder with the variable “aberration_correction” set to “false”, or open the “main_dpc.ipynb” Jupyter notebook in the python_code folder. DPC with Aberration Correction: 1. Download the minFunc package (https://www.cs.ubc.ca/~schmidtm/Software/minFunc.html). Please cite as:
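Without aberration correction, a Tikhonov-regularized DPC solve reduces to a closed-form deconvolution in Fourier space. Given normalized DPC images I_k = (I_a - I_b)/(I_a + I_b) and their phase transfer functions H_k, the phase estimate is φ = F⁻¹[Σ_k H_k* F(I_k) / (Σ_k |H_k|² + α)]. In this NumPy sketch, the transfer functions are toy stand-ins (odd, purely imaginary sine profiles) rather than ones derived from the actual illumination patterns and pupil, and α and the function names are assumptions.

```python
import numpy as np


def dpc_image(i_a, i_b):
    """Normalized DPC image from two complementary half-circle illuminations."""
    return (i_a - i_b) / (i_a + i_b)


def tikhonov_dpc(dpc_images, transfer_fns, alpha=1e-3):
    """Tikhonov-regularized least-squares phase from K DPC images.

    dpc_images:   (K, N, N) normalized DPC images
    transfer_fns: (K, N, N) phase transfer functions H_k, in unshifted FFT order
    """
    numer = np.sum(np.conj(transfer_fns) * np.fft.fft2(dpc_images), axis=0)
    denom = np.sum(np.abs(transfer_fns) ** 2, axis=0) + alpha
    return np.real(np.fft.ifft2(numer / denom))


# Example with toy transfer functions for left/right and top/bottom pairs.
n = 64
f = np.fft.fftfreq(n)
FX, FY = np.meshgrid(f, f, indexing="ij")
H = np.stack([1j * np.sin(np.pi * FX), 1j * np.sin(np.pi * FY)])  # hypothetical H_k
rng = np.random.default_rng(4)
noise = rng.standard_normal((n, n))
phi_true = np.real(np.fft.ifft2(np.fft.fft2(noise) * np.exp(-(FX**2 + FY**2) / 0.01)))
phi_true -= phi_true.mean()            # these H_k vanish at DC, so constant phase is lost
measured = np.real(np.fft.ifft2(H * np.fft.fft2(phi_true)))       # weak-object model
phi_hat = tikhonov_dpc(measured, H, alpha=1e-6)
```

Using two orthogonal DPC axes matters here: each H_k alone has a line of zeros, but the sum of |H_k|² in the denominator is zero only at DC.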

3D Quantitative Differential Phase Contrast (DPC) phase imaging with LED array: This repository provides Matlab and Python code that implements a 3D refractive index reconstruction algorithm using 3D DPC through-focus intensity measurements. Images should be captured with LED array illumination or other switchable light sources, generating three (or more) half-circular (or half-annular) patterns. The 3D weak object transfer functions (WOTFs) are calculated from the source patterns, the pupil function, and the defocus step size. Finally, the 3D refractive index is solved by a 3D deconvolution. As in the 2D DPC case, a least-squares algorithm with Tikhonov regularization is implemented. Alternatively, deconvolution with total variation (TV) regularization and a non-negativity constraint further mitigates artifacts, based on a priori knowledge of the object. GPU code is available if the hardware allows, which greatly reduces computation time; in the Python code, we use the ArrayFire library and its Python wrapper for the GPU implementation. Run “main_3ddpc.m” in the matlab_code folder, or open the “main_3ddpc.ipynb” Jupyter notebook in the python_code folder. Please cite as:

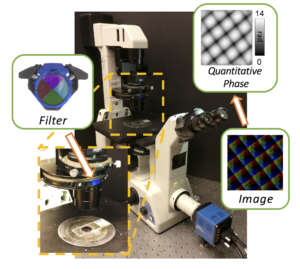

(Design) cDPC Filter

Please cite as:

Fourier Ptychography

Data-driven design for Fourier ptychographic microscopy: Want to learn the experimental design for your computational imaging system? Check out our code and datasets here to learn the best LED patterns for Fourier ptychographic microscopy. We provide an implementation of multiplexed Fourier ptychography in Python/PyTorch for learning the experimental design. Please cite as:

Illumination angle self-calibration in Fourier ptychography: Matlab code implements a circle-finding algorithm that calibrates the illumination angles for brightfield images and extends the calibration to darkfield images. Circular edge detection is performed on the Fourier transform of the data to calibrate the brightfield illumination directly. Python code implements a spectral correlation calibration method inside the Fourier ptychography algorithm, where the angles are iteratively refined by correlating overlapping spectra. Sample data is provided. Please cite as:

Fourier ptychography reconstruction algorithm with quasi-Newton second-order optimization: Matlab code implements a Fourier ptychography reconstruction algorithm for a set of images captured under different illumination angles (e.g. in an LED array microscope), using either sequential or multiplexed coded illumination. The algorithm simultaneously estimates the complex object function (amplitude and phase) at high resolution (set by the objective NA plus the illumination NA) and the pupil function (aberrations). It implements a sequential quasi-Newton method with Tikhonov (L2) regularization. Please cite as:
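The forward model that Fourier ptychography inverts can be written compactly: for the i-th LED, the low-resolution intensity is the squared magnitude of the inverse Fourier transform of the pupil P times the object spectrum O evaluated at an offset set by that LED's illumination angle. This NumPy sketch simulates the model with integer-pixel spectrum offsets (the crop is taken at +shift, a sign convention we chose) and a binary circular pupil; both are assumptions, the function names are ours, and the quasi-Newton update itself is not reproduced.

```python
import numpy as np


def circular_pupil(n_lo, radius_px):
    """Binary circular pupil (radius in spectrum pixels), centered in an n_lo x n_lo grid."""
    c = np.arange(n_lo) - n_lo // 2
    KX, KY = np.meshgrid(c, c, indexing="ij")
    return (KX**2 + KY**2 <= radius_px**2).astype(complex)


def fp_forward(obj, pupil, shifts):
    """Simulate low-resolution FP images for integer spectrum shifts (sx, sy)."""
    n_hi, n_lo = obj.shape[0], pupil.shape[0]
    spectrum = np.fft.fftshift(np.fft.fft2(obj))          # centered object spectrum
    center = n_hi // 2
    images = []
    for sx, sy in shifts:
        r0 = center + sx - n_lo // 2                     # crop taken at +shift
        c0 = center + sy - n_lo // 2
        if min(r0, c0) < 0 or max(r0, c0) + n_lo > n_hi:
            raise ValueError("shift moves the pupil outside the object spectrum")
        sub = spectrum[r0:r0 + n_lo, c0:c0 + n_lo] * pupil
        # Scale so that a uniform, transparent object gives unit brightfield intensity.
        field = np.fft.ifft2(np.fft.ifftshift(sub)) * (n_lo / n_hi) ** 2
        images.append(np.abs(field) ** 2)
    return np.stack(images)


# Example: a weak phase object, a 5-pixel pupil radius, and a 5 x 5 grid of shifts.
n_hi, n_lo = 64, 16
yy, xx = np.mgrid[:n_hi, :n_hi] - n_hi / 2
obj = np.exp(1j * 0.5 * np.exp(-(xx**2 + yy**2) / 40.0))
pupil = circular_pupil(n_lo, radius_px=5)
shifts = [(sx, sy) for sx in range(-8, 9, 4) for sy in range(-8, 9, 4)]
low_res = fp_forward(obj, pupil, shifts)
```

A reconstruction compares these predicted images with the measured ones and updates both O and P. Whether an image is brightfield or darkfield follows from whether the shifted DC term still falls inside the pupil.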

(Dataset) LED array 2D Fourier ptychography: This dataset (which may take a long time to download!) contains images from 293 different LED illumination angles up to NA = 0.5, captured with a 4x 0.1 NA objective. Fourier ptychography thus enables ~0.6 NA (our reconstruction is here). We use an Adafruit LED array placed ~6 cm above the sample on a Nikon TE300 inverted microscope (more experimental parameters are in the text file). The sample is a dog stomach cardiac region histology sample. Please cite as: L. Tian, X. Li, K. Ramchandran, L. Waller, Biomedical Optics Express 5(7), 2376-2389 (2014). L. Tian, Z. Liu, L. Yeh, M. Chen, Z. Jingshan, L. Waller, Optica 2(10), 904-911 (2015). More datasets:
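The NA figures above follow from simple geometry: an LED at lateral distance r from the optical axis, a height h above the sample, illuminates it at NA_illum = sin(arctan(r/h)) = r/sqrt(r² + h²), and the synthetic NA is approximately NA_obj + max NA_illum (here 0.1 + 0.5 ≈ 0.6). The sketch computes this for a square LED grid. Only the ~6 cm height comes from the description; the 4 mm pitch and 33 × 33 layout are hypothetical values, not the parameters listed in the dataset's text file.

```python
import numpy as np


def led_illumination_na(pitch_mm, n_side, height_mm):
    """Illumination NA of each LED in an n_side x n_side grid centered on the optical axis."""
    offsets = (np.arange(n_side) - (n_side - 1) / 2) * pitch_mm
    X, Y = np.meshgrid(offsets, offsets, indexing="ij")
    r = np.hypot(X, Y)
    return r / np.sqrt(r**2 + height_mm**2)   # sin(arctan(r / h))


na_obj = 0.1
na_illum = led_illumination_na(pitch_mm=4.0, n_side=33, height_mm=60.0)  # hypothetical layout
used = na_illum <= 0.5                  # LEDs kept for reconstruction (up to NA 0.5)
brightfield = na_illum < na_obj         # LEDs whose direct light enters the objective
synthetic_na = na_obj + na_illum[used].max()
print(f"{used.sum()} LEDs used, {brightfield.sum()} brightfield, synthetic NA ~ {synthetic_na:.2f}")
```

LEDs with NA_illum below the objective NA give brightfield images; the rest give darkfield images, which carry the high spatial frequencies that push the synthetic NA beyond the objective's.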


(Dataset) 3D Fourier ptychography: Images from sequential LED illumination up to 0.41 NA, using a 4x objective (0.1 NA). We achieved resolution corresponding to NA ~0.5 with a multiple-scattering multi-slice model that combines 3D light field refocusing with FPM to achieve super-resolution in both the lateral (x, y) and axial (z) dimensions. Please cite as: L. Tian, L. Waller, 3D intensity and phase imaging from light field measurements in an LED array microscope, Optica 2, 104-111 (2015). More datasets:

Phase from Defocus

2D phase retrieval from coherent defocus stack via nonlinear optimization: This code recovers the complex field at focus (phase and amplitude) from a coherent defocus stack. The defocus stack consists of multiple intensity images, which are measurements of the through-focus coherent complex field; it can be measured on a microscope by changing the focus distance. The reconstruction method estimates the complex field at focus by solving a nonlinear optimization problem that minimizes the mean squared error between the measured defocus stack and the predicted measurement, which is obtained by propagating the complex field at focus. The nonlinear optimization problem is solved with a modified Gauss-Newton method. Please cite as:
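The predicted measurement in this optimization is obtained by propagating the in-focus field to each defocus distance and taking the intensity. Below is a minimal NumPy sketch of that forward model and of the mean-squared-error objective, assuming scalar angular-spectrum propagation in a homogeneous medium. The units, grid values, and function names are our assumptions, and the modified Gauss-Newton solver itself is not shown.

```python
import numpy as np


def propagate(field, dz, dx, wavelength):
    """Angular-spectrum propagation of a 2D complex field by dz (same units as dx)."""
    f = np.fft.fftfreq(field.shape[0], d=dx)
    FX, FY = np.meshgrid(f, f, indexing="ij")
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kernel = np.where(arg > 0, np.exp(2j * np.pi * dz * np.sqrt(np.maximum(arg, 0.0))), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * kernel)


def defocus_stack(field_focus, z_list, dx, wavelength):
    """Predicted intensity images |propagate(field, z)|^2 for each defocus distance z."""
    return np.stack([np.abs(propagate(field_focus, z, dx, wavelength)) ** 2 for z in z_list])


def stack_mse(field_focus, measured, z_list, dx, wavelength):
    """Mean squared error between the measured and predicted defocus stacks."""
    return np.mean((defocus_stack(field_focus, z_list, dx, wavelength) - measured) ** 2)


# Example: a smooth, pure-phase test object (hypothetical values, in micrometers).
n, dx, wl = 64, 0.5, 0.5
yy, xx = np.mgrid[:n, :n] - n / 2
field = np.exp(1j * 0.5 * np.exp(-(xx**2 + yy**2) / 50.0))
z_list = np.linspace(-20, 20, 9)
measured = defocus_stack(field, z_list, dx, wl)
```

With these grid values every sampled spatial frequency propagates (none is evanescent), so propagating by +z and then -z returns the original field exactly, a useful check before plugging the model into an optimizer.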

2D phase retrieval from partially coherent defocus stack via nonlinear optimization: This code recovers the phase and the source from a partially coherent defocus stack by nonlinear optimization. Please cite as:

(Dataset) Phase from defocus cheek cell: This 68.2 MB image dataset contains a stack of 129 through-focus images from a Nikon TE300 microscope, captured with a piezo axial motion stage (Thorlabs PZ). Experimental parameters are listed in a separate text file included in the zip download. The sample is unstained cheek cells from Jingshan Zhong’s mouth, placed on a microscope slide and sealed with a coverslip. This is nearly a pure phase object, though there are some small specks of amplitude variation. Please cite as: Z. Jingshan, R. Claus, J. Dauwels, L. Tian, L. Waller, Optics Express 22(9), 10661-10674 (2014).

Older Work

Light field refocusing and 3D differential phase contrast on LED array microscope: Matlab code implements the popular ‘shift-and-add’ light field digital refocusing algorithm. The code is designed for processing data captured by sequentially turning on an array of illumination angles on our LED array microscope. It then splits the data by illumination angle according to the differential phase contrast (DPC) method to compute the phase gradient information of the sample. The same algorithms can also be used with lenslet-based light field microscopes.
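Shift-and-add refocusing is short enough to sketch in full. Under illumination at angle θ, a point at defocus z appears laterally displaced by about z·tan θ, so refocusing to depth z shifts each single-LED image back by that amount and averages the stack. This NumPy sketch rounds shifts to whole pixels with `np.roll` (periodic boundaries); the released Matlab code may handle sub-pixel shifts and the real LED geometry differently, and the function name is ours.

```python
import numpy as np


def shift_and_add(images, tan_angles, z, pixel_size):
    """Refocus a stack of single-LED images to depth z.

    images:     (K, N, N) intensity images, one per illumination angle
    tan_angles: (K, 2) values of tan(theta_x), tan(theta_y) for each LED
    z, pixel_size: same length units; shifts are rounded to whole pixels here.
    """
    refocused = np.zeros(images.shape[1:])
    for img, (tx, ty) in zip(images, tan_angles):
        sx = int(round(-z * tx / pixel_size))   # undo the lateral displacement z * tan(theta)
        sy = int(round(-z * ty / pixel_size))
        refocused += np.roll(img, shift=(sx, sy), axis=(0, 1))
    return refocused / len(images)


# Example: a single point at depth z0 seen under a 3 x 3 set of illumination angles.
n, px, z0 = 64, 1.0, 10.0
tan_angles = np.array([[tx, ty] for tx in (-0.3, 0.0, 0.3) for ty in (-0.3, 0.0, 0.3)])
images = np.zeros((len(tan_angles), n, n))
for k, (tx, ty) in enumerate(tan_angles):
    images[k, 32 + int(round(z0 * tx / px)), 32 + int(round(z0 * ty / px))] = 1.0
sharp = shift_and_add(images, tan_angles, z0, px)      # refocused to the point's depth
blurred = shift_and_add(images, tan_angles, 0.0, px)   # refocused to the nominal focus
```

At the correct depth all nine copies of the point land on the same pixel; at any other depth they spread out, which is the basis for digital refocusing through a volume.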

Phase from defocus GUI: This Matlab-based phase recovery GUI provides a platform for implementing various TIE-based methods and iterative phase retrieval on a stack of through-focus intensity images. The input stack can be a 3D Matlab matrix (*.mat) or a sequence of TIFF images (registration GUI included). Defocus steps need not be linearly spaced. The user can choose between standard TIE, higher-order TIE [1], iterative TIE [2], Gaussian Process TIE [3], or the Gerchberg-Saxton iterative method for large defocus distances (see Fienup 1982). Please cite as: G. Gunjala, L. Waller, Open Source PhaseGUI, UC Berkeley (2014)
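For reference, the standard TIE relates the axial intensity derivative to the phase: k ∂I/∂z = -∇·(I ∇φ), with k = 2π/λ. If the in-focus intensity is assumed uniform (I = I0), this becomes the Poisson equation ∇²φ = -(k/I0) ∂I/∂z, which can be inverted with FFTs. The NumPy sketch below makes exactly those assumptions, plus periodic boundaries, a central-difference estimate of ∂I/∂z from two images at ±Δz, and a small regularizer `eps` at zero frequency; it is an illustration, not the GUI's implementation.

```python
import numpy as np


def tie_uniform_intensity(i_minus, i_plus, dz, i0, wavelength, dx, eps=1e-9):
    """Recover phase from two defocused images via the TIE with uniform intensity i0."""
    k = 2 * np.pi / wavelength
    didz = (i_plus - i_minus) / (2 * dz)              # central-difference dI/dz
    f = np.fft.fftfreq(didz.shape[0], d=dx)
    FX, FY = np.meshgrid(f, f, indexing="ij")
    lap = -4 * np.pi**2 * (FX**2 + FY**2)             # Fourier symbol of the Laplacian
    rhs = -(k / i0) * np.fft.fft2(didz)               # FT of -(k / I0) dI/dz
    phi_hat = rhs * lap / (lap**2 + eps)              # ~ rhs / lap, forced to 0 at DC
    return np.real(np.fft.ifft2(phi_hat))


# Example: synthesize dI/dz from a known smooth phase, then invert it.
n, dx, wl, i0, dz = 64, 1.0, 0.5, 1.0, 2.0            # hypothetical values (micrometers)
f = np.fft.fftfreq(n, d=dx)
FX, FY = np.meshgrid(f, f, indexing="ij")
rng = np.random.default_rng(5)
phi = np.real(np.fft.ifft2(np.fft.fft2(rng.standard_normal((n, n))) * np.exp(-(FX**2 + FY**2) / 0.005)))
phi -= phi.mean()                                      # TIE cannot recover a constant phase
lap_phi = np.real(np.fft.ifft2(-4 * np.pi**2 * (FX**2 + FY**2) * np.fft.fft2(phi)))
didz_true = -(i0 * wl / (2 * np.pi)) * lap_phi         # from k dI/dz = -I0 lap(phi)
phi_rec = tie_uniform_intensity(i0 - dz * didz_true, i0 + dz * didz_true, dz, i0, wl, dx)
```

The size of `eps` should be chosen relative to the smallest nonzero |lap| on the grid; real data also needs it to suppress the low-frequency noise amplification that the 1/|f|² inversion causes.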

CalOptrics GPU toolbox: CalOptrics is an open-source, fast, and easy-to-use C++ library for CUDA-based GPU computing of basic functions used in computational optical imaging. It uses an array-based function set, which makes programming with CUDA easier than writing raw CUDA code. Under the hood, CalOptrics is powered by Thrust, Cusp, and other open-source CUDA libraries, and it is designed with object-oriented and abstraction techniques, such as interfaces, so that modifying the raw lower-level implementations is simple and quick. The design also makes it easy to add a non-CUDA backend, such as OpenCL, to the library. Please cite as: D. Ramalingam, L. Waller, CalOptrics Open Source, UC Berkeley (2014).


Please see the Waller Lab GitHub page for our latest open-source software and hardware.

One of the best things about DiffuserCam is that it is easy to build your own! We provide a guide on how to build your own lensless camera for 2D photography. We recommend using a Raspberry Pi camera with Scotch tape as the diffuser. We will also walk you through the algorithms, step by step, in an IPython notebook. This tutorial contains all of the information you need to build your own DiffuserCam and is suitable for class projects.
