Our research covers optics, signal processing, computer algorithms, and system design. Optical waves can be treated in a signal processing framework, in which optical beams are represented as complex fields decomposed into spatial frequencies and temporal frequencies (color). Coherence is the second-order correlation function of a statistical optical beam. We aim to measure and control all of these parameters to build better imaging systems.
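Here is a minimal numerical sketch in Python with NumPy that makes the "second-order correlation" concrete. It assumes a hypothetical 1D partially coherent beam, built by spatially smoothing complex white noise. The correlation length `corr_len` is an illustrative parameter, not a measured value. The sketch estimates the mutual intensity J(x1, x2) = <E(x1) E*(x2)> by averaging over many realizations. It then normalizes J by the intensities to get the complex degree of coherence. Its magnitude is 1 for a point with itself and falls off with separation.

```python
import numpy as np

def mutual_intensity(fields):
    """Estimate J(x1, x2) = <E(x1) E*(x2)> from an ensemble of complex fields.

    fields: array of shape (n_realizations, n_pixels).
    """
    # Average of the outer products E E^H over all realizations
    return np.einsum("ri,rj->ij", fields, fields.conj()) / fields.shape[0]

def degree_of_coherence(J):
    """Normalize J by the intensities to get mu(x1, x2), with |mu| <= 1."""
    intensity = np.real(np.diag(J))
    return J / np.sqrt(np.outer(intensity, intensity))

# Hypothetical partially coherent beam: complex white noise smoothed by a
# Gaussian kernel, so nearby points are correlated and distant ones are not.
rng = np.random.default_rng(0)
n_real, n_pix, corr_len = 2000, 128, 8.0
x = np.arange(n_pix)
kernel = np.exp(-((x - n_pix // 2) ** 2) / (2 * corr_len**2))
white = rng.standard_normal((n_real, n_pix)) + 1j * rng.standard_normal((n_real, n_pix))
# Circular convolution with the kernel, done in the Fourier domain
fields = np.fft.ifft(np.fft.fft(white, axis=1) * np.fft.fft(np.fft.ifftshift(kernel)), axis=1)

mu = degree_of_coherence(mutual_intensity(fields))
print(abs(mu[64, 64]), abs(mu[64, 66]), abs(mu[64, 100]))
```

Nearby points come out strongly correlated and distant points nearly uncorrelated. A shorter `corr_len` would model a less coherent source.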

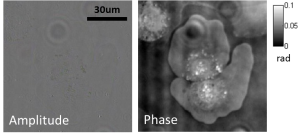

Phase imaging

LED array microscope

We work on a new type of microscope hack in which the lamp of a regular microscope is replaced with an LED array, enabling many new capabilities. We perform brightfield, darkfield, phase contrast, super-resolution, and 3D phase imaging, all through computational illumination tricks, and obtain high-resolution phase images across a large field of view. L. Tian, X. Li, K. Ramchandran, L. Waller, Biomedical Optics Express 5(7), 2376-2389 (2014).
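Whether a given LED produces a brightfield or a darkfield image depends on its illumination angle relative to the objective's numerical aperture (NA). The sketch below assumes a planar, square LED grid centered on the optical axis, in air. The 32 × 32 grid size, 4 mm pitch, 60 mm LED-to-sample distance, and 0.25 NA objective are hypothetical values chosen only for illustration.

```python
import numpy as np

def led_illumination_na(n_side, pitch_mm, distance_mm):
    """Illumination NA of each LED in a square grid centered on the optical axis.

    Assumes a planar array distance_mm below the sample, in air (n = 1).
    """
    idx = np.arange(n_side) - (n_side - 1) / 2
    x, y = np.meshgrid(idx * pitch_mm, idx * pitch_mm)
    r = np.hypot(x, y)
    # sin(theta) of the ray from each LED to the on-axis sample point
    return r / np.sqrt(r**2 + distance_mm**2)

def classify_leds(na_illum, na_objective):
    """LEDs inside the objective NA give brightfield; outside give darkfield."""
    brightfield = na_illum <= na_objective
    return brightfield, ~brightfield

# Hypothetical geometry, not the parameters of any specific setup
na = led_illumination_na(32, 4.0, 60.0)
bf, df = classify_leds(na, 0.25)
print(bf.sum(), "brightfield LEDs,", df.sum(), "darkfield LEDs")
```

Turning on subsets of each group (or asymmetric halves of the brightfield group) is what lets the same hardware switch between contrast modes.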

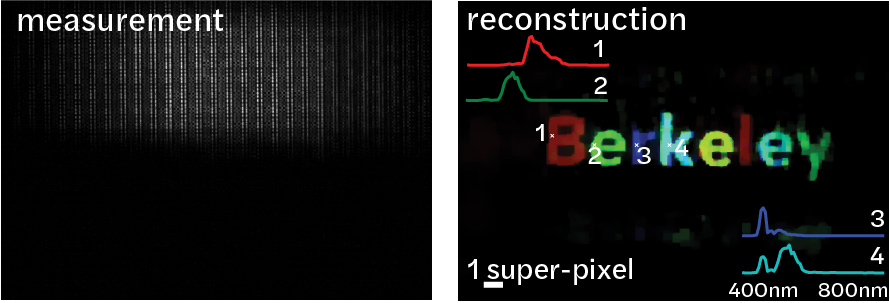

Lensless single-shot video and hyperspectral imaging

Building on our DiffuserCam work, we have extended our lensless camera to two new dimensions: wavelength and time.

Single-shot hyperspectral: Spectral DiffuserCam combines a spectral filter array and a diffuser to capture a hyperspectral volume with 64 spectral bands from a single image, at higher resolution than would be possible with a lens-based system.
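A simplified forward model for this kind of system is b = Σ_λ M_λ ⊙ (h ∗ x_λ). Each spectral slice x_λ is blurred by the diffuser PSF h. It is then weighted pixel by pixel by the filter array's transmission M_λ in that band, and the sensor sums all bands into one image b. The sketch below rests on several assumptions: a single wavelength-independent PSF, circular convolution, and no cropping or noise. All data are random placeholders, and this is not the released Spectral DiffuserCam code.

```python
import numpy as np

def conv2_fft(img, psf):
    """Circular 2D convolution with the PSF via the FFT (edge effects ignored)."""
    return np.real(np.fft.ifft2(np.fft.fft2(img) * np.fft.fft2(psf)))

def corr2_fft(img, psf):
    """Circular 2D correlation with the PSF: the adjoint of conv2_fft for real data."""
    return np.real(np.fft.ifft2(np.fft.fft2(img) * np.conj(np.fft.fft2(psf))))

def spectral_forward(x, psf, masks):
    """Single 2D measurement b = sum_l masks[l] * (psf conv x[l]).

    x:     (n_bands, H, W) hyperspectral volume
    psf:   (H, W) diffuser PSF, assumed identical for all bands
    masks: (n_bands, H, W) per-pixel filter-array transmission in each band
    """
    return sum(masks[l] * conv2_fft(x[l], psf) for l in range(x.shape[0]))

def spectral_adjoint(b, psf, masks):
    """Adjoint A^T b, as needed by gradient-based solvers such as FISTA or ADMM."""
    return np.stack([corr2_fft(masks[l] * b, psf) for l in range(masks.shape[0])])

rng = np.random.default_rng(1)
n_bands, H, W = 64, 32, 32
x = rng.random((n_bands, H, W))       # hypothetical scene
psf = rng.random((H, W))              # hypothetical diffuser PSF
masks = rng.random((n_bands, H, W))   # hypothetical filter-array transmissions
b = spectral_forward(x, psf, masks)   # one 2D image encodes all 64 bands

# Dot-product test: <A x, y> should equal <x, A^T y>
y = rng.random((H, W))
lhs, rhs = np.vdot(b, y), np.vdot(x, spectral_adjoint(y, psf, masks))
print(lhs, rhs)
```

Recovering x from b is underdetermined, so a reconstruction typically adds a prior (for example sparsity or total variation) and uses the adjoint inside an iterative solver.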

Single-shot video: for the time dimension, a diffuser encodes different time points by leveraging a camera's rolling shutter, enabling the recovery of 40 video frames at over 4,500 frames per second from a single image. Both systems can be very cheap, compact, and easy to build.
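The rolling-shutter idea can be sketched with a similar model. Suppose each sensor row is exposed only during its own time window. Then the single image is b = Σ_t S_t ⊙ (h ∗ x_t), where S_t selects the rows read out at time t. The diffuser spreads each scene point across many rows, so every time point leaves a trace in the captured image. The sketch below assumes equal, non-overlapping bands of rows per frame, which simplifies a real rolling shutter. The sizes and data are hypothetical.

```python
import numpy as np

def rolling_shutter_masks(n_frames, height, width):
    """Binary masks S_t: frame t is recorded only by one band of sensor rows.

    Simplification: rows are split into n_frames equal, non-overlapping bands;
    a real rolling shutter has overlapping exposure windows.
    """
    masks = np.zeros((n_frames, height, width))
    for t, rows in enumerate(np.array_split(np.arange(height), n_frames)):
        masks[t, rows, :] = 1.0
    return masks

def video_forward(x, psf, masks):
    """b = sum_t S_t * (psf conv x_t), with circular FFT convolution per frame."""
    blurred = np.real(np.fft.ifft2(np.fft.fft2(x, axes=(-2, -1)) * np.fft.fft2(psf),
                                   axes=(-2, -1)))
    return np.sum(masks * blurred, axis=0)

rng = np.random.default_rng(5)
n_frames, height, width = 40, 80, 64
psf = rng.random((height, width))            # hypothetical diffuser PSF
masks = rolling_shutter_masks(n_frames, height, width)
x = rng.random((n_frames, height, width))    # hypothetical 40-frame video
b = video_forward(x, psf, masks)             # one rolling-shutter image
print(b.shape)
```

For a static scene, the masks sum to one on every row, so the model reduces to an ordinary diffuser image. That makes a convenient sanity check.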

Data-driven adaptive microscopy

Using a combination of physics-based modeling and machine learning, microscopes can adaptively image samples based on feedback from the sample itself.

Single-shot autofocus: using only the addition of one or a few LEDs as an illumination source, a physics-based neural network can focus a microscope based on a single image of the sample.
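The physics that makes LED-based autofocus possible can be illustrated without any learning. This sketch is not the physics-based neural network described above; it is a simpler, classical stand-in. Suppose two LEDs sit at symmetric angles ±θ. A defocus Δz then shifts the two illumination copies of the sample by ±Δz tan θ, so the single image contains two copies separated by 2Δz tan θ. The image's autocorrelation has side peaks at that separation, which gives |Δz|. The pixel size (taken in the sample plane), the angle, and the synthetic image are all hypothetical. The sign of the defocus is ambiguous in this simple version.

```python
import numpy as np

def peak_separation_px(image, min_shift=3):
    """Dominant horizontal shift between the two copies contained in `image`.

    Uses the circular autocorrelation (Wiener-Khinchin, via the FFT): a doubled
    image has a central peak plus side peaks at +/- the copy separation.
    """
    f = image - image.mean()
    ac = np.real(np.fft.ifft2(np.abs(np.fft.fft2(f)) ** 2))
    row = ac[0, :]                          # zero vertical lag: LEDs offset along x
    half = row[min_shift : len(row) // 2]   # skip the central (zero-lag) peak
    return min_shift + int(np.argmax(half))

def defocus_from_separation(sep_px, pixel_um, tan_theta):
    """|dz| = separation / (2 tan(theta)) for two LEDs at +/- theta.

    pixel_um is the effective pixel size in the sample plane (an assumption).
    """
    return sep_px * pixel_um / (2.0 * tan_theta)

# Hypothetical test image: a random sample seen as two copies shifted by +/- 5 px
rng = np.random.default_rng(2)
sample = rng.random((64, 64))
image = np.roll(sample, 5, axis=1) + np.roll(sample, -5, axis=1)
sep = peak_separation_px(image)
print(sep, defocus_from_separation(sep, pixel_um=0.65, tan_theta=0.2))
```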

Learned adaptive illumination multiphoton microscopy

Structured illumination & imaging with scattering

We adapt the existing framework for structured illumination (SI) super-resolution microscopy to SI imaging with unknown patterns. This allows super-resolution fluorescence reconstruction of biological samples illuminated with unknown, uncalibrated patterns, which is useful when imaging through aberrations or turbid media. It also enables high-throughput fluorescence imaging, yielding high-resolution fluorescence images across a large field of view. We further develop new scattering models and apply them to reconstruct increasingly complex samples.
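In SI microscopy, each raw image can be modeled as y_k = h ∗ (p_k ⊙ x). Here the fluorophore density x is multiplied by the k-th illumination pattern p_k and blurred by the detection PSF h. With unknown patterns, both p_k and x must be estimated. The sketch below only simulates this forward model, using hypothetical random patterns and a Gaussian stand-in PSF. It checks one property such reconstructions can exploit: when the patterns average to uniform illumination, the mean of the raw images approaches a widefield image.

```python
import numpy as np

def gaussian_psf(n, sigma):
    """Centered 2D Gaussian PSF with unit sum (a stand-in for a measured PSF)."""
    c = np.arange(n) - n // 2
    g = np.exp(-(c[:, None] ** 2 + c[None, :] ** 2) / (2 * sigma**2))
    return g / g.sum()

def si_forward(x, patterns, psf):
    """Raw SI images y_k = psf conv (pattern_k * x), via circular FFT convolution."""
    H = np.fft.fft2(np.fft.ifftshift(psf))   # move the PSF center to index (0, 0)
    return np.real(np.fft.ifft2(np.fft.fft2(patterns * x) * H))

rng = np.random.default_rng(3)
n, n_patterns = 64, 200
x = rng.random((n, n))                       # hypothetical fluorophore density
patterns = rng.random((n_patterns, n, n))    # unknown, uncalibrated patterns
psf = gaussian_psf(n, sigma=2.0)
raw = si_forward(x, patterns, psf)

# Uniform illumination at the patterns' mean power (0.5) gives the widefield image
widefield = si_forward(x, np.full((1, n, n), 0.5), psf)[0]
rel_err = np.linalg.norm(raw.mean(axis=0) - widefield) / np.linalg.norm(widefield)
print(rel_err)
```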

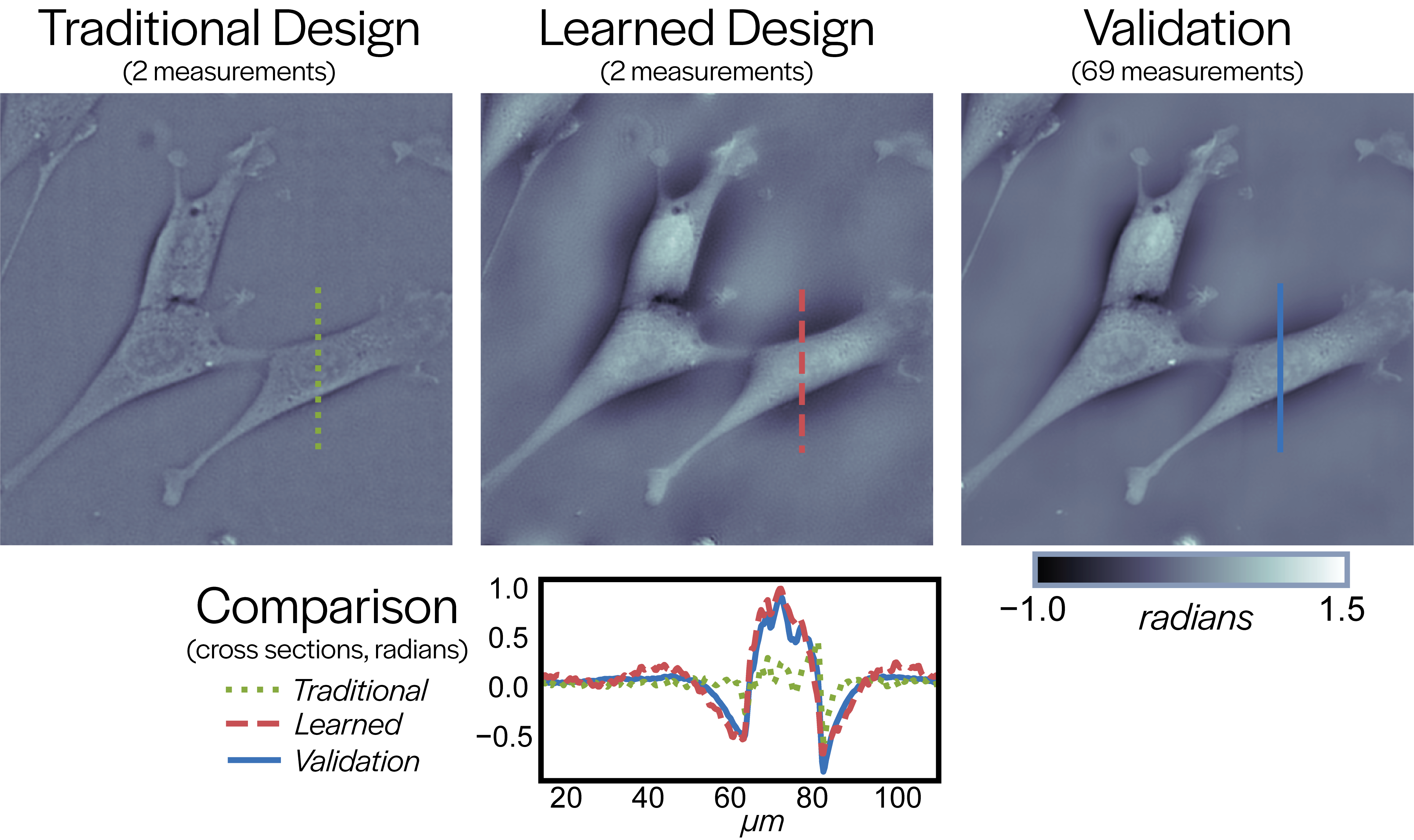

Machine learning for computational imaging

Computational imaging lives at the intersection of hardware and software. Machine learning gives us the opportunity to jointly optimize the hardware and the software of an imaging system. We investigate various machine learning techniques for efficiently designing computational imaging systems that maximize overall performance.

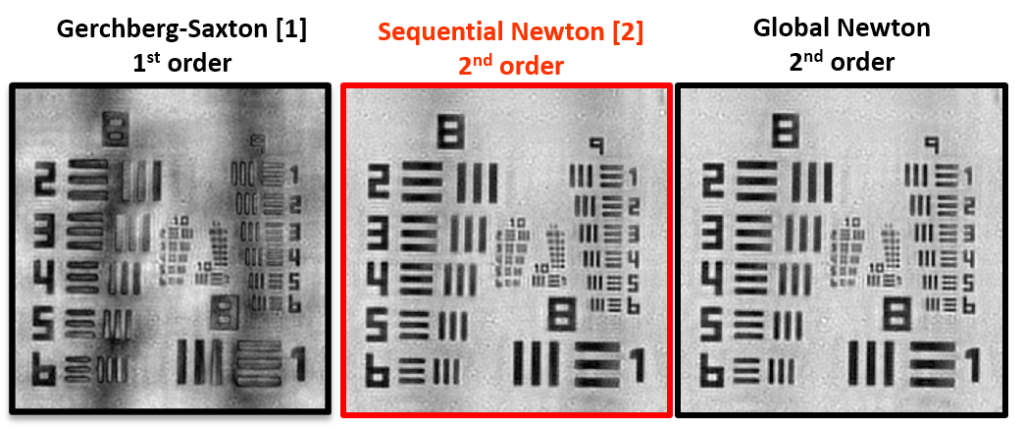

Fourier ptychography algorithms

The algorithms that achieve both a large field of view and high resolution are rooted in phase retrieval. We use large-scale nonlinear, non-convex optimization, much like the training of neural networks in machine learning, but imaging applications bring new challenges. R. Eckert, Z.F. Phillips, L. Waller, Applied Optics 57(19), 5434-5442 (2018).
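The sketch below illustrates the phase retrieval at the core of Fourier ptychography with a minimal sequential alternating-projection reconstruction, the classic Gerchberg-Saxton-style FP update. It is not necessarily the algorithm studied in the paper cited above. It assumes a known, aberration-free binary pupil P and noise-free simulated data. It also assumes that each LED's illumination angle maps to an integer shift k_j of the object spectrum O. The object, sizes, and shift grid are hypothetical. Each LED image follows I_j = |F^-1{P · O(k - k_j)}|^2. The update keeps the current phase estimate while enforcing the measured amplitude.

```python
import numpy as np

def circ_pupil(n, radius):
    """Binary circular pupil of the given radius, in Fourier-space pixels."""
    c = np.arange(n) - n // 2
    return (c[:, None] ** 2 + c[None, :] ** 2 <= radius**2).astype(float)

def window(N, n, shift):
    """Slices selecting the n x n sub-spectrum centered at DC + shift."""
    r0, c0 = N // 2 + shift[0] - n // 2, N // 2 + shift[1] - n // 2
    return slice(r0, r0 + n), slice(c0, c0 + n)

def low_res_field(O, shift, pupil):
    """Complex low-res field for one LED: inverse FFT of the filtered sub-spectrum."""
    sub = O[window(O.shape[0], pupil.shape[0], shift)] * pupil
    return np.fft.ifft2(np.fft.ifftshift(sub))

def fp_forward(O, shifts, pupil):
    """Intensities I_j = |low_res_field|^2 for every LED shift k_j."""
    return np.array([np.abs(low_res_field(O, s, pupil)) ** 2 for s in shifts])

def fp_error(O, images, shifts, pupil):
    """Relative mismatch between predicted and measured intensities."""
    return np.linalg.norm(fp_forward(O, shifts, pupil) - images) / np.linalg.norm(images)

def fp_reconstruct(images, shifts, pupil, O, n_iter=20):
    """Sequential alternating projections over all LED images."""
    O = O.copy()
    for _ in range(n_iter):
        for I, s in zip(images, shifts):
            sl = window(O.shape[0], pupil.shape[0], s)
            field = low_res_field(O, s, pupil)
            # Keep the estimated phase, replace the amplitude with the measurement
            field = np.sqrt(I) * np.exp(1j * np.angle(field))
            update = np.fft.fftshift(np.fft.fft2(field))
            # Overwrite the spectrum only inside the pupil support
            O[sl] = O[sl] * (1 - pupil) + update * pupil
    return O

rng = np.random.default_rng(4)
N, n, radius = 64, 32, 8
obj = (1 + 0.3 * rng.random((N, N))) * np.exp(0.5j * rng.random((N, N)))  # hypothetical
O_true = np.fft.fftshift(np.fft.fft2(obj))
pupil = circ_pupil(n, radius)
shifts = [(r, c) for r in range(-12, 13, 6) for c in range(-12, 13, 6)]  # 5 x 5 LEDs
images = fp_forward(O_true, shifts, pupil)

# Initial guess: amplitude of the on-axis image with zero phase
O0 = np.zeros((N, N), dtype=complex)
on_axis = np.sqrt(images[shifts.index((0, 0))])
O0[window(N, n, (0, 0))] = np.fft.fftshift(np.fft.fft2(on_axis)) * pupil
O_est = fp_reconstruct(images, shifts, pupil, O0, n_iter=20)
print(fp_error(O0, images, shifts, pupil), fp_error(O_est, images, shifts, pupil))
```

On real data, noise, pupil aberrations, and LED position errors make the problem harder, which is why more robust formulations are used in practice.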

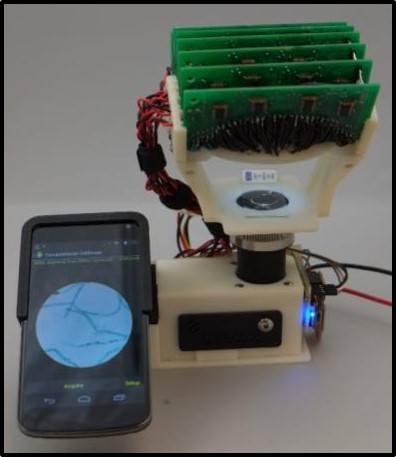

Computational CellScope

In collaboration with the CellScope group at Berkeley (Fletcher Lab), we work on computational illumination and algorithms for cell-phone-based microscopes, in particular the CellScope.

10x, NA ~0.25, 10 Hz, C. elegans (Dillin lab)

Real-time multi-mode microscope system

We present a single-camera imaging system that simultaneously acquires brightfield, darkfield, and differential phase contrast (DPC) images in real time. Our method uses a programmable LED array as the illumination source, which allows flexible patterning of illumination angles. We achieve a frame rate of 50 Hz at 2560 × 2160 pixels and 100 Hz at 1920 × 1080 pixels, with speed limited only by the camera.
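Here is one way to see how a single programmable LED array yields several contrast modes. Brightfield uses LEDs inside the objective NA, and darkfield uses LEDs outside it. DPC compares two images taken with complementary half-circles of brightfield LEDs. The sketch below builds those LED masks on a hypothetical 9 × 9 grid, specified directly in illumination NA. It then computes the usual DPC normalization (I_L - I_R)/(I_L + I_R). It does not model how the real-time system multiplexes the patterns on one camera.

```python
import numpy as np

def half_masks(na_x, na_y, na_objective):
    """Left/right halves of the brightfield LEDs (illumination NA <= objective NA)."""
    inside = np.hypot(na_x, na_y) <= na_objective
    return inside & (na_x < 0), inside & (na_x > 0)

def dpc(i_left, i_right, eps=1e-12):
    """Differential phase contrast from two complementary half-circle images.

    Dividing by the sum cancels absorption and overall brightness, leaving a
    signal that varies with the phase gradient along the split direction.
    """
    return (i_left - i_right) / (i_left + i_right + eps)

# Hypothetical 9 x 9 LED grid, given directly as illumination NA per LED
na_1d = np.linspace(-0.4, 0.4, 9)
na_x, na_y = np.meshgrid(na_1d, na_1d)
left, right = half_masks(na_x, na_y, na_objective=0.25)
darkfield = np.hypot(na_x, na_y) > 0.25   # LEDs outside the objective NA

# Toy intensities: a brighter left-illuminated image gives a positive DPC value
print(dpc(np.array([3.0]), np.array([1.0])))
```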

DiffuserCam: Lensless imaging
